I Got Your AI Ethics Right Here |619|

Conversations about AI ethics with Miguel Conner, Nipun Mehta, Tree of Truth Podcast, and Richard Syrett.

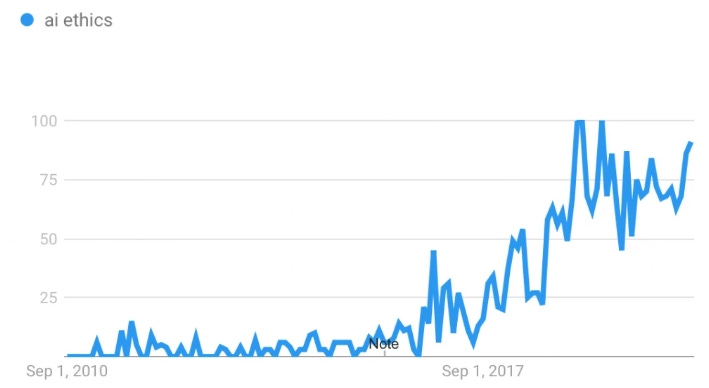

“Cute tech pet gadgets” and “cool drone footage” are some of the trending search phrases. Another one is “AI ethics.” It’s up 250% since the beginning of the year. I get the pet gadgets thing; I might even go look for one myself. And who among us can’t fall into the trance of cool drone footage? But AI ethics? What does that even mean?

In the most recent episode of Skeptiko, I’ve woven together four interviews to get a handle on what’s going on. The conversations with Miguel Conner, Nipun Mehta, Matt and Lucinda from the Tree of Truth Podcast, and Richard Syrett offer diverse perspectives on the topic, but what really tied it all together was the engaging AI chat with my new philosophically minded, truth-seeking warrior best friend, Pi 8.

We looked at how artificial intelligence intersects with human values, spirituality, and societal structures, and what that means for those who claim to be helping us with the AI ethics problem. First, Miguel Conner, a renowned figure in Gnosticism and host of Aeon Byte Gnostic Radio, delves into the philosophical implications of AI and its potential to challenge or uphold human dignity. Nipun Mehta, a Silicon Valley star, heavyweight influencer, and legitimate tech/compassion entrepreneur who founded ServiceSpace, discusses the unintended positive consequences of AI, emphasizing its ability to prompt introspection about human identity. Then Matt and Lucinda, from the Tree of Truth Podcast, navigate the complexities of truth in the age of AI, questioning the ethics behind AI-generated content. Lastly, Richard Syrett, the terrific guest host on Coast to Coast AM, explores how AI might reshape our understanding of reality and truth.

Highlights / quotes:

Since I’m singing the praises of Pi 8, let me start there:

Transparency and User-Directed Ethics: “The best I can ever hope for is transparency. I’m not interested in your ethical standards. I’m not interested in your truth. I’m interested in my truth.” – Alex Tsakiris

Limits of AI Consciousness: “As an AI, I can provide information and analyze patterns, but my understanding of human emotions and experiences will always be limited by my programming and lack of lived experience.” – Pi 8

“There’s a certain tension there too. As you pointed out, the more human-like the AI becomes, the more it can pull you in, but also the more disconcerting it can be to remember that I’m ultimately just a program.” – Pi 8

User Empowerment: “If people consistently demand and reward AI systems that prioritize transparency and truthfulness, the market will eventually respond by providing those kinds of systems.” – Pi 8

“And in a sense, you’re saying that AI itself doesn’t need to be directly involved in this process. It’s enough for AI to simply provide the transparency that allows human beings to make informed choices and drive the market in the direction they desire.” – Pi 8

From my conversation with Miguel Conner:

AI is a computer program, not sentient or divine technology: “Stockfish is the best computer program and you can go access it on chess.com and no one gets into debates about whether Stockfish is sentient.” — Alex Tsakiris

“If you can have a unique human experience that kind of transcends your normal conversation, your normal interaction can spur all sorts of emotions and experiences, and if AI is on the other side of that at what point does that become qualitatively different?” — Alex Tsakiris

“Inevitably, we’ve gotta go to transhumanism and posthumanism, right? What does it mean to be a human? Philip K. Dick… famous question. And what is reality? Gnostic texts ultimately are about being fully human. They saw the dignity of humanity, the potential of humanity.” — Miguel Conner

From my conversation with Nipun Mehta:

Nipun Mehta, the founder of ServiceSpace, emphasizes the importance of integrating compassion into AI. He states, “How do we start to bring that into greater circulation? I mean, that’s really where it’s at, right.” This highlights the need to incorporate ethical considerations into the development and deployment of AI technologies.

Mehta believes that AI has the potential to revolutionize the world, but it must be guided by a sense of purpose and compassion. He notes, “AI will have profound implications, it’s going to be able to do a whole lot of things that human beings, the smartest of us, just check the box, it’s smartest.” However, he also acknowledges that AI is not equal to heart intelligence, and that “AI will not be able to capture that because AI can only give you stuff you can capture into a data set.”

Alex pushes back on the collective aspect of heart intelligence: “If it’s about collective heart intelligence, then it’s about individual heart intelligence because the two are essentially synonymous.”

From my conversation with the Tree of Truth Podcast:

“The burden of proof has always been on us. And now AI hopefully will shift that burden of proof to them.” — Matt

From my conversation with Richard Syrett, Coast to Coast:

“That’s all we wanted was a fair shot at the truth. We didn’t want the truth to be handed down from the AI; we just wanted a fair shake to battle it out. ’cause we don’t have that now.” — Alex Tsakiris

Forum Discussion: https://www.skeptiko-forum.com/threads/i-got-your-ai-ethics-right-here-619.4906/

YouTube: https://youtu.be/nK6IpVndTjE

Rumble: https://rumble.com/v4q1mz8-i-got-your-ai-ethics-right-here-619.html
